Concurrency is not what you think it is!

Understand the difference between concurrency and parallelism.

Many people come across this term when learning about multi-threading. We tend to think that concurrency means running two separate parts of a program at the same time, side by side. It makes sense to think of it that way, but what happens behind the scenes is something else.

Let's take a quick look at what concurrency is and how it differs from parallelism. I'll keep this article short, simple and to the point. Hope you enjoy it!

What does simultaneous mean?

Do you love taking long walks in the park with your friends? Well, I love it! We usually talk with our friends while walking along with them. We don't need to stop walking to be able to talk, and neither do we stop talking because we are already doing another activity, i.e. walking. This is exactly what simultaneous means. It refers to the ability to perform two tasks exactly at the same time and independently of each other.

But here's another question: can you drink coffee and talk to someone at the same time? Well, at any given moment, you are either taking a sip or talking. You can't do both simultaneously. But you can drink coffee and talk with someone concurrently.

Concurrency is the ability to make progress on multiple tasks over the same period of time. At any given moment, however, only one of the tasks is actually being performed, not both. The processor switches between tasks so fast that it gives us the illusion of parallelism.

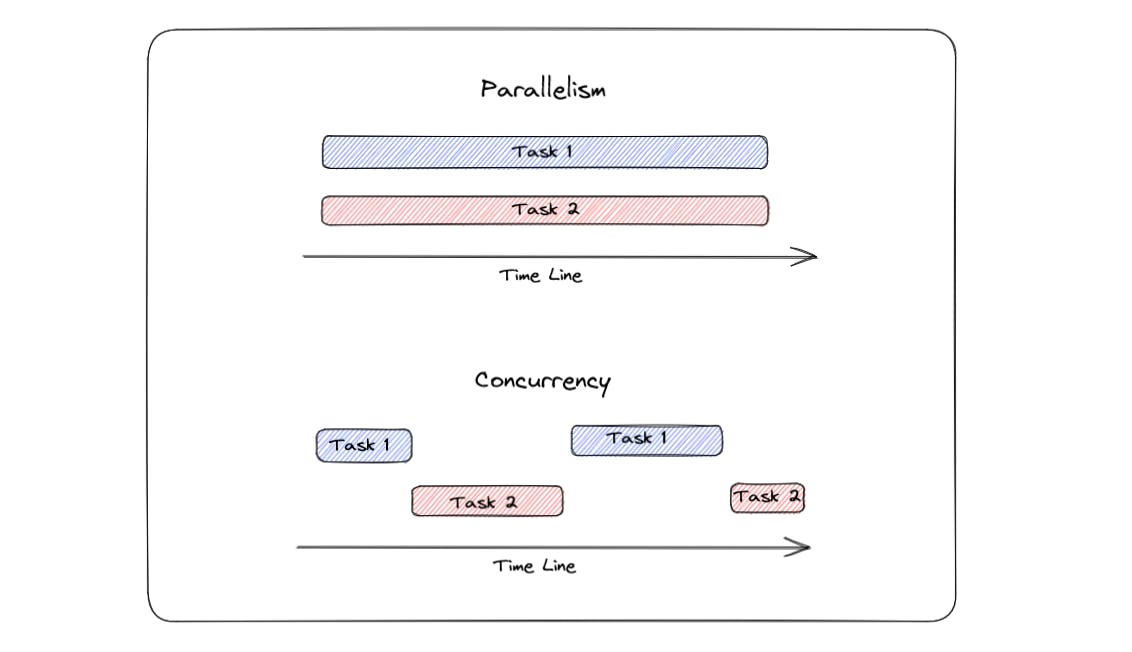

Here's an illustration to get a better idea of it:-

In parallelism, both tasks are executed at exactly the same time, i.e. simultaneously.

In concurrency, both tasks make progress over the same period, but at any given moment only one of them is being executed while the other waits for its turn.
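To make the turn-taking concrete, here is a minimal sketch in Python. It is not how an operating system schedules threads; it is a toy round-robin scheduler built on generators, and the names `task` and `run_concurrently` are made up for this illustration. Each task does one step and then hands control back, so only one task runs at any moment, yet both finish.

```python
def task(name, steps):
    """A toy task: do one step of work, then give up control."""
    for i in range(1, steps + 1):
        yield f"{name} step {i}"


def run_concurrently(tasks):
    """Toy round-robin scheduler: run one step of each task in turn."""
    log = []
    queue = list(tasks)
    while queue:
        current = queue.pop(0)
        try:
            log.append(next(current))  # exactly one task runs here
            queue.append(current)      # not finished: back of the line
        except StopIteration:
            pass                       # finished: drop it from the queue
    return log


log = run_concurrently([task("sip coffee", 2), task("talk", 3)])
for entry in log:
    print(entry)
```

The printed steps alternate between the two tasks until the shorter one runs out, which is the coffee-and-conversation situation from earlier: never both at once, but both get done.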

How can we achieve parallelism?

Now the question comes: when do tasks execute in parallel, and when do they execute concurrently? If your CPU has more than one core, different tasks/threads can be executed in parallel across different cores. With just one core, tasks can only be executed concurrently.
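As a rough sketch of spreading work across cores, the Python example below uses `ProcessPoolExecutor` from the standard library. Separate processes are used here on the assumption that you are running standard CPython, where threads inside one process generally do not execute Python code on several cores at once. The functions `count_primes` and `run_in_parallel` are hypothetical names chosen for this example, and whether the two calls really land on different cores is up to the operating system.

```python
import os
from concurrent.futures import ProcessPoolExecutor


def count_primes(limit):
    """CPU-bound work: count the primes below `limit` by trial division."""
    count = 0
    for n in range(2, limit):
        if all(n % d for d in range(2, int(n ** 0.5) + 1)):
            count += 1
    return count


def run_in_parallel(limits):
    """Hand each limit to a worker process; the OS may place them on different cores."""
    with ProcessPoolExecutor() as pool:
        return list(pool.map(count_primes, limits))


if __name__ == "__main__":
    print("cores available:", os.cpu_count())
    print(run_in_parallel([20_000, 20_000]))
```

On a machine with a single core the same code still works, but the two worker processes would take turns on that core, i.e. they would run concurrently rather than in parallel.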

How does concurrency improve performance?

Let's say we have two tasks to perform:

Task 1 - To get data from a database and deliver it to the user

Task 2 - To perform some calculations on a piece of data.

Here's how the computer executes these tasks if we run them in different threads, i.e. concurrently.

First, the processor is assigned to Task 1. Task 1 starts executing and requests the data from the database (or any other external source of data). While this I/O operation is in progress, Task 1 has to wait for the data to arrive; only then can it deliver the data to the user. While Task 1 waits, the processor is assigned to Task 2 and performs the calculations on the data it was supposed to work on. Once the data has arrived, Task 1 resumes and sends it to its destination.

This way, the processor didn't wait for Task 1 to finish completely before moving to Task 2. Instead, it used the time Task 1 spent waiting on I/O to perform Task 2. This is how concurrency improves performance: it overlaps the I/O activities of some tasks with the CPU activities of others.
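The two tasks above can be sketched with Python's `threading` module. This is only an illustration under a few assumptions: the database call is faked with `time.sleep(0.5)`, the returned record is made up, and the function names `fetch_from_database`, `perform_calculations` and `run_both` are hypothetical.

```python
import threading
import time

results = {}


def fetch_from_database():
    """Task 1: simulated I/O. The thread just waits, as if for a database reply."""
    time.sleep(0.5)                       # stand-in for the real I/O wait
    results["task1"] = {"user": "alice"}  # made-up record for illustration


def perform_calculations():
    """Task 2: CPU work that can run while Task 1 is waiting."""
    results["task2"] = sum(i * i for i in range(200_000))


def run_both():
    """Run both tasks in separate threads and return the elapsed time in seconds."""
    start = time.perf_counter()
    t1 = threading.Thread(target=fetch_from_database)
    t2 = threading.Thread(target=perform_calculations)
    t1.start()
    t2.start()
    t1.join()
    t2.join()
    return time.perf_counter() - start


elapsed = run_both()
print(f"both tasks finished in about {elapsed:.2f} s")
```

If the calculation takes less time than the simulated wait, the total should come out close to the wait alone rather than the wait plus the calculation, because Task 2 ran while Task 1 was idle.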

Conclusion

We often use the words concurrency and parallelism interchangeably, but under the hood they work quite differently. I hope I was able to present a clear picture of how the two concepts differ from each other and how they work internally. Thanks for reading to the end 😊.